Stopping Crime with Digital Advantage

Nayar Prize II Finalists Incorporate Computerized Data Analysis to Prevent Crime

Illinois Institute of Technology researchers are working hand-in-hand with the Elgin Police Department to thread a delicate needle of using an algorithm to prevent crime while respecting individual rights.

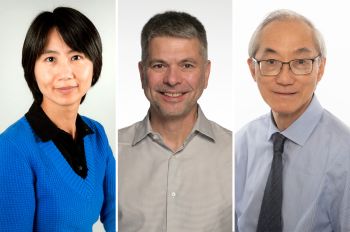

The research team of Miles Wernick, Motorola Endowed Chair Professor of Electrical and Computer Engineering, director of the Medical Imaging Research Center, and professor of biomedical engineering; Lori Andrews, Distinguished Professor of Law and director of the Institute for Science, Law, and Technology at Chicago-Kent College of Law; and Yongyi Yang, Harris Perlstein Professor of Electrical and Computer Engineering and professor of biomedical engineering, was named the Nayar Prize II finalist for its Data-Driven Crime Prevention Program.

“We’re trying to create a blueprint to allow small- and medium-sized cities to approach crime prevention from a perspective that is proactive and focuses on providing social services,” Wernick says. “In a city of any significant size, there’s a lot going on, so it’s difficult for social workers to know where to apply their resources best.”

Often social workers wait for people to seek them out for services, Wernick says. The research team is trying to give social workers an informational tool to identify people who may be in need of assistance.

“[The algorithm] analyzes recent crime data from crime incidents,” Wernick says. “From that, it estimates an individual’s risk of being involved in serious crimes. Based on that, it provides recommendations to social workers, which they can use as they see fit.”

“The notion isn’t a one-size-fits-all in terms of potential intervention,” Andrews says. “It really is about working with the individual to try to figure out what that individual needs most. Some say they need rehab, others want a GED or they need work training.”

All the information the algorithm uses comes from incident and arrest records filed by police officers in the field. By tapping into that information, the algorithm can identify people who may be at greater risk of being a future victim of crime, or who may be a future perpetrator, although the algorithm does not differentiate between the two.

“It turns out [differentiation] is very difficult, because the people most heavily involved in crime usually have both things happen,” Wernick says. “Surprisingly, many people in this category are homeless. Homeless people are often getting attacked and then getting into trouble themselves.”

The researchers say they are very diligent about incorporating a legal and ethical framework, as well as respecting individual rights, while building the algorithm. That is why they insist on using only public information and being transparent about the data’s origins in the police department.

“We’re not collecting any new information,” Wernick insists. “They already have this information.”

“We are not gathering information on people like some smartphone apps do,” Yang says. “It’s nothing like that. The computerized output is to be used only as a second opinion. You can look at this output, but there still will be a social worker who decides what to do with it.”

Andrews adds that the data collection does not go beyond the police database of filed reports. It does not delve into social media accounts, for example, to find corroborating evidence. Nor is it to be used for punitive purposes, such as by a judge considering sentencing or by a parole board.

The researchers also say they have been vigilant in eliminating biases in the algorithm. No information on race or ZIP codes, for example, is included.

“We’re not using variables that might infringe on rights of free speech or privacy or association,” Andrews says. “That has been where other police departments have gotten into trouble.”

All of this information is analyzed not to generate a list of individuals, but rather to help social workers identify people who may not otherwise come to their attention.

“It might be relatively obvious there is a particular gang member who is getting into all sorts of trouble,” Wernick says, hypothetically. “But it can be there is a person with mental illness, who, over a period of time, is getting off their medication and then repeatedly something bad happens. It may not be the sort of person they would think is naturally a high-crime person, and yet something is going on there. If there is a way to reach out and help this person and offer them opportunities, it could be beneficial.”

The researchers believe the algorithm will identify a small number of people. So far, 7,000 criminal incidents involving 22,000 people, as either perpetrators or victims, have been analyzed. According to the data, approximately 200 individuals, or fewer than 0.2 percent of the Elgin population, account for 30 percent of total crime severity.
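To make the idea of a small group accounting for a large share of "crime severity" concrete, here is a minimal sketch of how such a share could be computed. It is an illustration only, not the team's algorithm: the severity scores, the person identifiers, and the function name `severity_share_of_top` are all hypothetical, and the article does not describe how severity is actually measured.

```python
from collections import defaultdict


def severity_share_of_top(records, top_n):
    """Return the fraction of total severity attributed to the top_n people.

    `records` is an iterable of (person_id, severity) pairs, one pair per
    involvement in an incident. Both fields are hypothetical stand-ins.
    """
    totals = defaultdict(float)
    for person_id, severity in records:
        totals[person_id] += severity

    grand_total = sum(totals.values())
    if grand_total == 0:
        return 0.0  # no incidents, so no share to report

    # Rank people by their summed severity, highest first.
    ranked = sorted(totals.values(), reverse=True)
    return sum(ranked[:top_n]) / grand_total


# Made-up toy data, not drawn from any police records.
records = [
    ("A", 5.0), ("A", 4.0), ("B", 3.0),
    ("C", 1.0), ("D", 1.0), ("E", 1.0),
]
share = severity_share_of_top(records, top_n=1)
print(f"Top 1 person accounts for {share:.0%} of total severity")
```

In this toy data set, one person out of five accounts for 9 of the 15 severity points. The figure the researchers report has the same shape, with roughly 200 individuals accounting for 30 percent of total severity.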

Being able to identify a small number of people might make it more palatable to a community to support social services—especially if residents can see what impact it can make.

“If you help one person, typically that person has a family,” Wernick says. “That can help the family and neighbors and friends. And if you’re talking about a small number of people, you may, through the indirect benefit, address a large percent of the crime in a small- or mid-sized community.”

The researchers say the Elgin Police Department stood out among the numerous law-enforcement agencies eager to incorporate algorithms into crime prevention because of its progressive culture and because it has its own social work unit.

“I think it illustrates their approach to crime prevention,” Wernick says of a police department including a social work unit. “They’re very much community-oriented. So I felt this would be a good partner who would understand what we are trying to do.”

As a result, Wernick says, the team has found a partner with which it can produce a blueprint that can be replicated to achieve the societal impact outlined in the Nayar Prize goals.

“If a program is successful, it serves as a model for others to follow,” Wernick says. “We wanted to conduct, under the Nayar funding, a pilot study in which we would work with these experts in social services to come up with a model or blueprint that could stand as an example. They would know the dos and don’ts and here’s how to go about creating the tool.”